Publications

2025


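The Effect of Visual Depth on the Vergence-Accommodation Conflict on 3D Selection Performance within Virtual Reality Headsets
Mohammad Raihanul Bashar, Mayra Donaji Barrera Machuca, Wolfgang Stuerzlinger, and Anil Ufuk Batmaz
The Visual Computer, May 2025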

Prior studies have shown that the vergence–accommodation conflict negatively affects interaction performance in virtual reality (VR) and augmented reality (AR) systems, particularly as object depth increases. This paper examines user selection performance across six visual depths. In a study closely resembling prior research, eighteen participants completed an ISO 9241-411 task at six depth distances. As depth increased, selection times increased and participants' throughput decreased. Based on this finding, we propose a Fitts’ law model based on focal distances, which models pointing times in VR and AR systems with substantially higher accuracy. We hope that our findings help developers create 3D user interfaces for VR and AR that offer better performance and an improved user experience.
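
Throughput in ISO 9241-411 studies is commonly computed from effective measures. These are the effective width We = 4.133 × SD of the endpoint deviations, the effective index of difficulty IDe = log2(D / We + 1), and TP = IDe / MT. The sketch below assumes that common formulation and computes throughput for one distance condition. The function name and inputs are illustrative. For brevity, it uses the nominal distance D rather than the measured effective distance. It is not the focal-distance model proposed in the paper.

```python
import math
from statistics import mean, stdev


def effective_throughput(distance, endpoint_deviations, movement_times):
    """Effective throughput (bits/s) for one distance/width condition.

    distance: nominal target distance D (any length unit).
    endpoint_deviations: signed offset of each selection endpoint from the
        target centre along the movement axis (same unit as distance).
    movement_times: per-trial movement time in seconds.
    """
    # Effective width: 4.133 x standard deviation of the endpoint offsets
    effective_width = 4.133 * stdev(endpoint_deviations)
    # Effective index of difficulty (Shannon formulation), in bits
    effective_id = math.log2(distance / effective_width + 1)
    # Throughput divides IDe by the mean movement time of the condition
    return effective_id / mean(movement_times)


# Illustrative numbers only, not data from the paper
tp = effective_throughput(0.5, [0.01, -0.012, 0.008, -0.006], [0.92, 1.05, 0.98, 1.10])
print(f"{tp:.2f} bits/s")
```

Under this formulation, longer selection times at greater depths directly lower throughput, which matches the trend the abstract reports.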

@article{bashar2025depthvac,
  title = {The Effect of Visual Depth on the Vergence-Accommodation Conflict on 3D Selection Performance within Virtual Reality Headsets},
  author = {Bashar, Mohammad Raihanul and Barrera Machuca, Mayra Donaji and Stuerzlinger, Wolfgang and Batmaz, Anil Ufuk},
  journal = {The Visual Computer},
  publisher = {Springer},
  keywords = {Virtual Reality, Vergence-Accommodation Conflict, Stereo Deficiencies, Distal Pointing, Ray Casting, Selection, Fitts’ Law},
  issn = {1432-2315},
  year = {2025},
  month = may,
  numpages = {17},
  doi = {10.1007/s00371-025-03990-x},
  bibtex_show = {true},
  dimensions = {true},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598102/vac_model_sq4ma4.gif},
}

There Is More to Dwell Than Meets the Eye: Toward Better Gaze-Based Text Entry Systems With Multi-Threshold Dwell
Aunnoy K Mutasim, Mohammad Raihanul Bashar, Christof Lutteroth, Anil Ufuk Batmaz, and Wolfgang Stuerzlinger
In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, May 2025

Dwell-based text entry seems to peak at 20 words per minute (WPM). Yet little is known about the factors behind this limit, except that reaching it requires extensive training. We therefore conducted a longitudinal study, broke the overall dwell-based selection time into six components, and identified several design challenges and opportunities. We then designed two novel dwell keyboards that use multiple, much shorter dwell thresholds: Dual-Threshold Dwell (DTD) and Multi-Threshold Dwell (MTD). MTD (18.3 WPM) outperformed both DTD (15.3 WPM) and the conventional Constant-Threshold Dwell (12.9 WPM). Notably, absolute novices achieved these speeds within just 30 phrases. MTD’s average speed is also the fastest text entry speed yet reported for gaze-based keyboards. Finally, we discuss how our chosen parameters can be further optimized to pave the way toward more efficient dwell-based text entry.
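
Text-entry speeds such as those above are conventionally computed as WPM = (|T| − 1) / S × 60 × 1/5. Here |T| is the length of the transcribed string, S is the entry time in seconds, and one "word" is five characters, including spaces. The abstract does not state the exact formula, so this minimal sketch assumes that standard convention. The function name is illustrative.

```python
def words_per_minute(transcribed: str, seconds: float) -> float:
    """Entry rate in WPM, treating five characters (including spaces) as one word.

    transcribed: the final transcribed phrase.
    seconds: time from the first to the last character entry.
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    # |T| - 1: the clock starts when the first character is entered,
    # so that character is not counted against the elapsed time.
    return (len(transcribed) - 1) / seconds * 60 / 5


# Illustrative phrase and timing, not data from the paper
print(round(words_per_minute("my watch fell in the water", 25.0), 1))  # prints 12.0
```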

@inproceedings{10.1145/3706598.3713781,
  author = {Mutasim, Aunnoy K and Bashar, Mohammad Raihanul and Lutteroth, Christof and Batmaz, Anil Ufuk and Stuerzlinger, Wolfgang},
  title = {There Is More to Dwell Than Meets the Eye: Toward Better Gaze-Based Text Entry Systems With Multi-Threshold Dwell},
  year = {2025},
  isbn = {9798400713941},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3706598.3713781},
  doi = {10.1145/3706598.3713781},
  booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},
  articleno = {1088},
  numpages = {18},
  keywords = {Text Entry, Eye Gaze, Eye-Tracking, Dwell Thresholds, Learnability, QWERTY},
  bibtex_show = {true},
  dimensions = {true},
  location = {Yokohama, Japan},
  series = {CHI '25},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598100/chi_dwell_kaberp.gif},
}

Depth3DSketch: Freehand Sketching Out of Arm’s Reach in Virtual Reality
Mohammad Raihanul Bashar, Mohammadreza Amini, Wolfgang Stuerzlinger, Mine Sarac, Ken Pfeuffer, Mayra Donaji Barrera Machuca, and Anil Ufuk Batmaz
In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, May 2025

With the increasing availability and popularity of virtual reality (VR) systems, 3D sketching applications have also boomed. Most of these applications focus on peripersonal sketching, i.e., within arm’s reach. Yet sketching in larger scenes requires users to walk around the virtual environment while sketching or to repeatedly change the sketch scale. This paper presents Depth3DSketch, a freehand 3D sketching technique that lets users sketch objects up to 2.5 m away. Users can select the sketching depth with three interaction methods: the joystick on a single controller, the intersection of the rays from two controllers, or the intersection of the controller ray and the user’s gaze. We compared these interaction methods in a user study. Users preferred the joystick for selecting visual depth, but there was no difference in accuracy or sketching time among the three methods.

@inproceedings{10.1145/3706599.3719717,
  author = {Bashar, Mohammad Raihanul and Amini, Mohammadreza and Stuerzlinger, Wolfgang and Sarac, Mine and Pfeuffer, Ken and Barrera Machuca, Mayra Donaji and Batmaz, Anil Ufuk},
  title = {Depth3DSketch: Freehand Sketching Out of Arm's Reach in Virtual Reality},
  year = {2025},
  isbn = {9798400713958},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3706599.3719717},
  doi = {10.1145/3706599.3719717},
  booktitle = {Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems},
  articleno = {175},
  numpages = {8},
  keywords = {3D Sketching, Eye-Gaze, VR, 3D User Interface, Multimodal},
  bibtex_show = {true},
  dimensions = {true},
  location = {Yokohama, Japan},
  series = {CHI EA '25},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598099/chi_depth3dsketch_f1hs5y.gif},
}

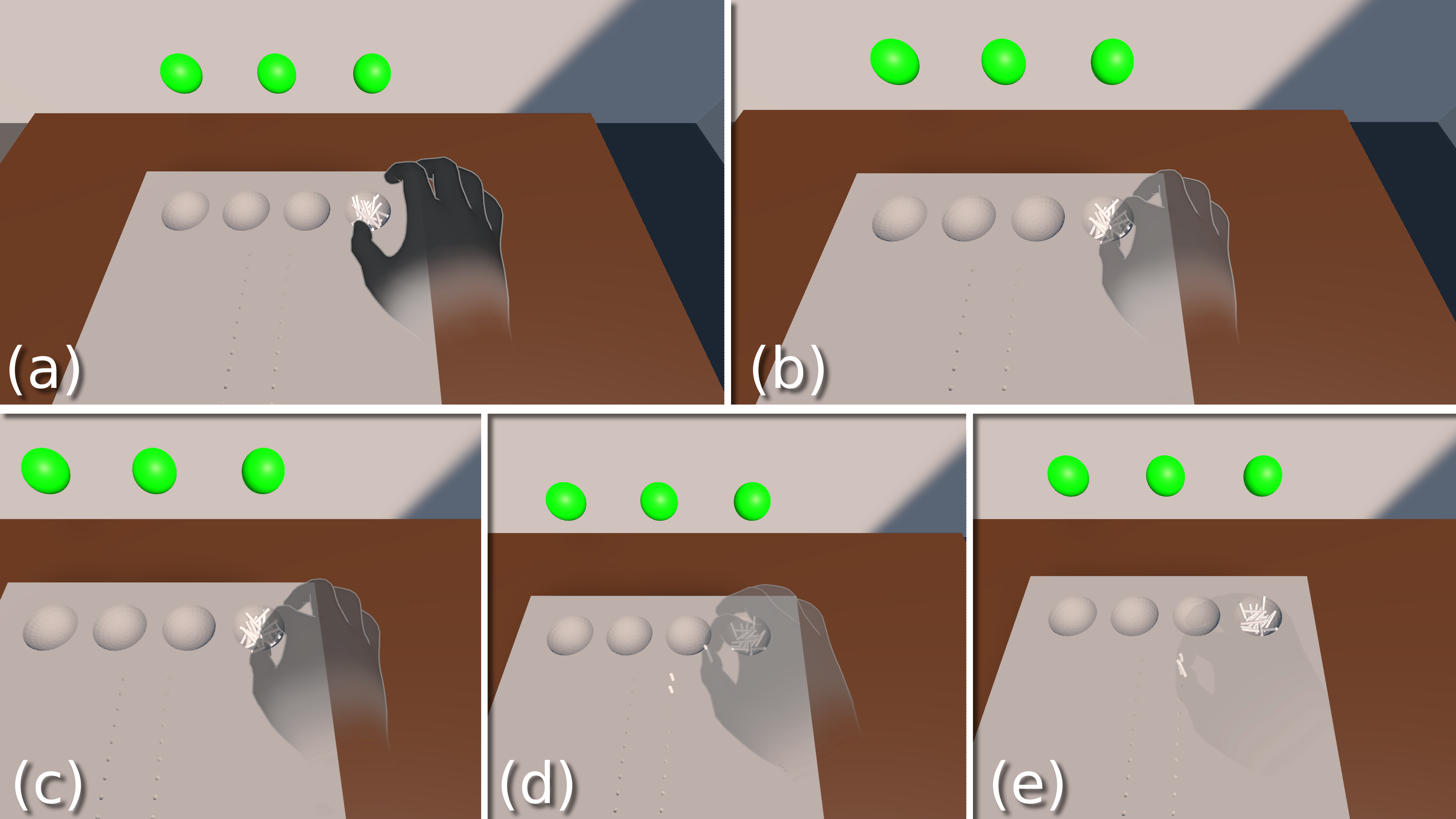

An Early Warning System Based on Visual Feedback for Light-Based Hand Tracking Failures in VR Head-Mounted Displays
Mohammad Raihanul Bashar and Anil Ufuk Batmaz
IEEE Transactions on Visualization and Computer Graphics, Saint-Malo, Brittany, France, Mar 2025

State-of-the-art Virtual Reality (VR) Head-Mounted Displays (HMDs) let users interact with virtual objects using their hands via built-in camera systems. However, the accuracy of hand movement detection is often limited by both camera hardware and software, including the image processing and machine learning algorithms used for hand skeleton detection. In this work, we investigated a visual feedback mechanism that serves as an early warning system: it detects hand skeleton recognition failures in VR HMDs and warns users in advance. We conducted two user studies to evaluate the system’s effectiveness. In the first study, participants stacked virtual cups. In the second, participants performed a ball sorting task, picking up colored balls and placing them into the corresponding baskets. In both studies, we monitored the VR HMD’s built-in hand tracking confidence and gave users visual feedback warning them whenever the tracking confidence was ‘low’. The results showed that warning users before the hand tracking algorithm fails improved the system’s usability and reduced frustration. The impact of our results extends beyond VR HMDs: any system that uses hand tracking, such as robotics, can benefit from this approach.
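
The abstract describes warning users when the headset's built-in hand tracking confidence is low. What follows is a hypothetical sketch only. The class, its parameters, and the frame counts are assumptions, not the authors' implementation or any headset SDK's API. It shows one way a per-frame monitor could raise the warning as soon as confidence drops, then clear it only after several consecutive high-confidence frames so the feedback does not flicker.

```python
from dataclasses import dataclass


@dataclass
class TrackingWarning:
    """Hypothetical per-frame monitor for a low/high tracking-confidence flag."""

    frames_to_warn: int = 1    # consecutive low frames before warning (1 = warn at once)
    frames_to_clear: int = 10  # consecutive high frames before hiding the warning
    warning: bool = False
    low_run: int = 0
    high_run: int = 0

    def update(self, confidence_is_low: bool) -> bool:
        """Feed one frame's confidence flag; return whether to show the warning."""
        if confidence_is_low:
            self.low_run += 1
            self.high_run = 0
            if self.low_run >= self.frames_to_warn:
                self.warning = True
        else:
            self.high_run += 1
            self.low_run = 0
            if self.high_run >= self.frames_to_clear:
                self.warning = False
        return self.warning


monitor = TrackingWarning(frames_to_clear=2)
print([monitor.update(low) for low in [False, True, False, False, False]])
# prints [False, True, True, False, False]
```

The asymmetry is a design choice. A quick warning keeps the feedback "early", while a slower clear avoids the warning blinking on and off when confidence hovers near the threshold.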

@article{10918865,
  author = {Bashar, Mohammad Raihanul and Batmaz, Anil Ufuk},
  journal = {IEEE Transactions on Visualization and Computer Graphics},
  title = {An Early Warning System Based on Visual Feedback for Light-Based Hand Tracking Failures in VR Head-Mounted Displays},
  year = {2025},
  volume = {},
  number = {},
  pages = {1--11},
  keywords = {Hands;Tracking;Visualization;Virtual environments;Cameras;Real-time systems;Usability;Alarm systems;Accuracy;User experience;Hand Tracking;Virtual Reality;Visual Feedback;System Usability;Early warning},
  doi = {10.1109/TVCG.2025.3549544},
  issn = {1941-0506},
  month = mar,
  bibtex_show = {true},
  dimensions = {true},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751596636/with_ball_w3fhia.gif},
  location = {Saint-Malo, Brittany, France},
}

2024


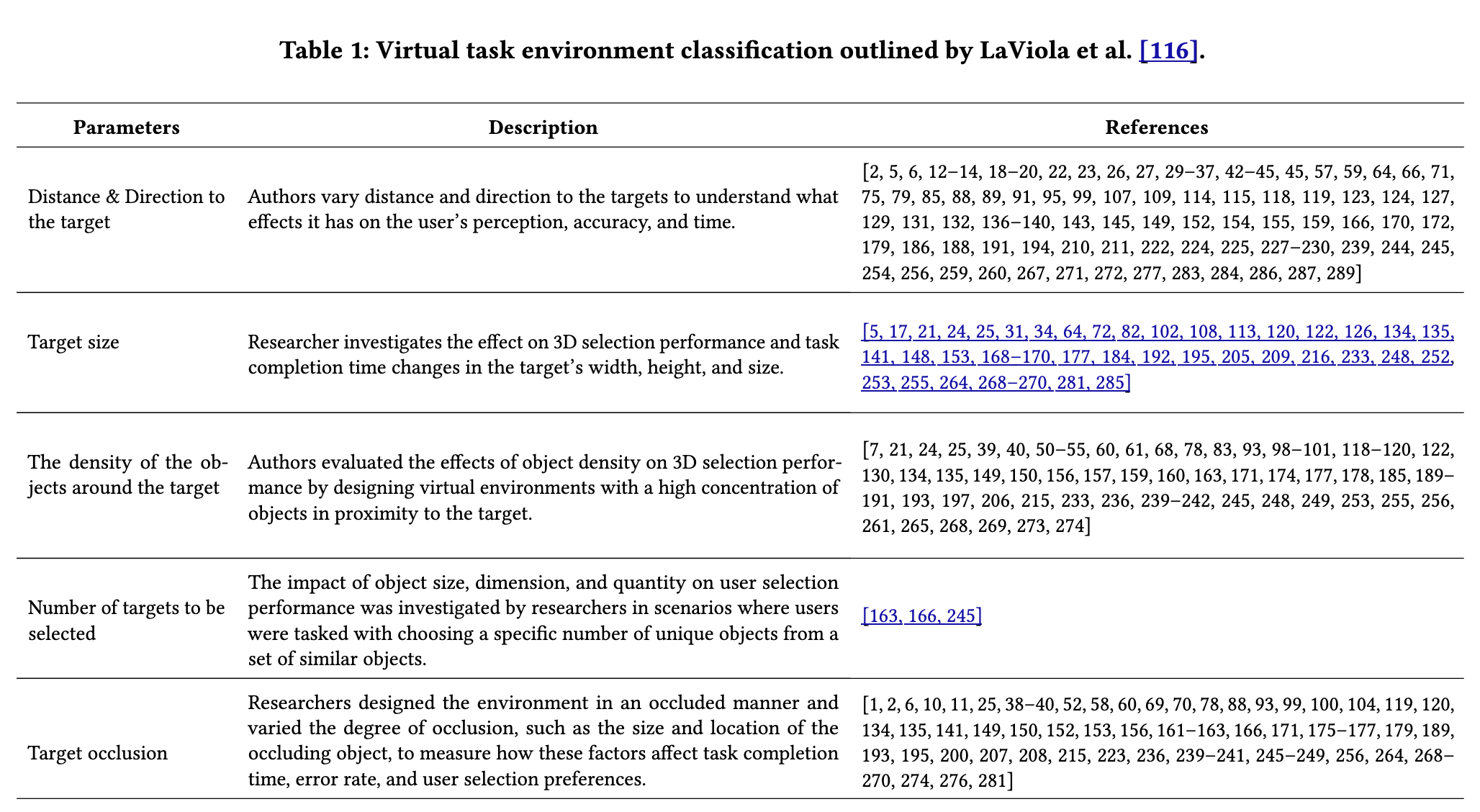

Virtual Task Environments Factors Explored in 3D Selection Studies
Mohammad Raihanul Bashar and Anil Ufuk Batmaz
In Proceedings of the 50th Graphics Interface Conference, Halifax, NS, Canada, Mar 2024

In recent years, researchers, developers, engineers, and designers have raced to create new interaction techniques that enhance users’ performance and experience in virtual environments. Selection is a key component of any 3D interaction technique. In this paper, we explore the environmental factors used in assessing 3D selection methods and classify each factor based on the task environment. Our approach began with a thorough literature collection process covering four major repositories for Human-Computer Interaction research (Scopus, ScienceDirect, IEEE Xplore, and the ACM Digital Library), from which we created a dataset of 277 papers. Drawing inspiration from the parameters outlined by LaViola et al., we manually classified each paper based on the task environment it describes. In addition, we explore the methodologies used in recent user studies to assess interaction techniques within various task environments, providing insights into the evolving landscape of virtual interaction research. We hope that our paper serves as a valuable resource for researchers, developers, and designers, offering a deeper understanding of task environments and fresh perspectives for evaluating their proposed 3D selection techniques in virtual environments.

@inproceedings{10.1145/3670947.3670983,
  author = {Bashar, Mohammad Raihanul and Batmaz, Anil Ufuk},
  title = {Virtual Task Environments Factors Explored in 3D Selection Studies},
  year = {2024},
  isbn = {9798400718281},
  publisher = {Association for Computing Machinery},
  bibtex_show = {true},
  dimensions = {true},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3670947.3670983},
  doi = {10.1145/3670947.3670983},
  booktitle = {Proceedings of the 50th Graphics Interface Conference},
  articleno = {10},
  numpages = {16},
  keywords = {3D Selection, 3D User Interfaces, Augmented Reality, Human-centered computing, Virtual Reality, Virtual Task Environment},
  location = {Halifax, NS, Canada},
  series = {GI '24},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751596332/gi_24_uhr206.png},
}

Subtask-Based Virtual Hand Visualization Method for Enhanced User Accuracy in Virtual Reality Environments
Laurent Voisard, Amal Hatira, Mohammad Raihanul Bashar, Mucahit Gemici, Mine Sarac, Marta Kersten-Oertel, and Anil Ufuk Batmaz
In 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Mar 2024

In virtual hand interaction techniques, the opacity of the virtual hand avatar can obstruct users’ visual feedback, which can harm accuracy and increase cognitive load. Because cognitive load is related to gaze movements, our study analyzes participants’ gaze movements across opaque, transparent, and invisible hand visualizations in order to create a new interaction technique. Our experimental setup used a Purdue Pegboard Test with reaching, grasping, transporting, and inserting subtasks. We examined where, and for how long, participants focused during these subtasks and, based on these findings, introduced a new virtual hand visualization method to increase accuracy. We hope that our results can be used in future virtual reality applications where users must interact with virtual objects accurately.

@inproceedings{voisard2024subtask,
  title = {Subtask-Based Virtual Hand Visualization Method for Enhanced User Accuracy in Virtual Reality Environments},
  author = {Voisard, Laurent and Hatira, Amal and Bashar, Mohammad Raihanul and Gemici, Mucahit and Sarac, Mine and Kersten-Oertel, Marta and Batmaz, Anil Ufuk},
  booktitle = {2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},
  pages = {6--11},
  year = {2024},
  doi = {10.1109/VRW62533.2024.00008},
  organization = {IEEE},
  bibtex_show = {true},
  dimensions = {true},
  copyright = {All rights reserved},
  langid = {english},
  keywords = {Human-centered computing, Visualization, Visualization techniques, Visualization design and evaluation methods},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598101/nidit_2024_eaitf6.png},
}

2023


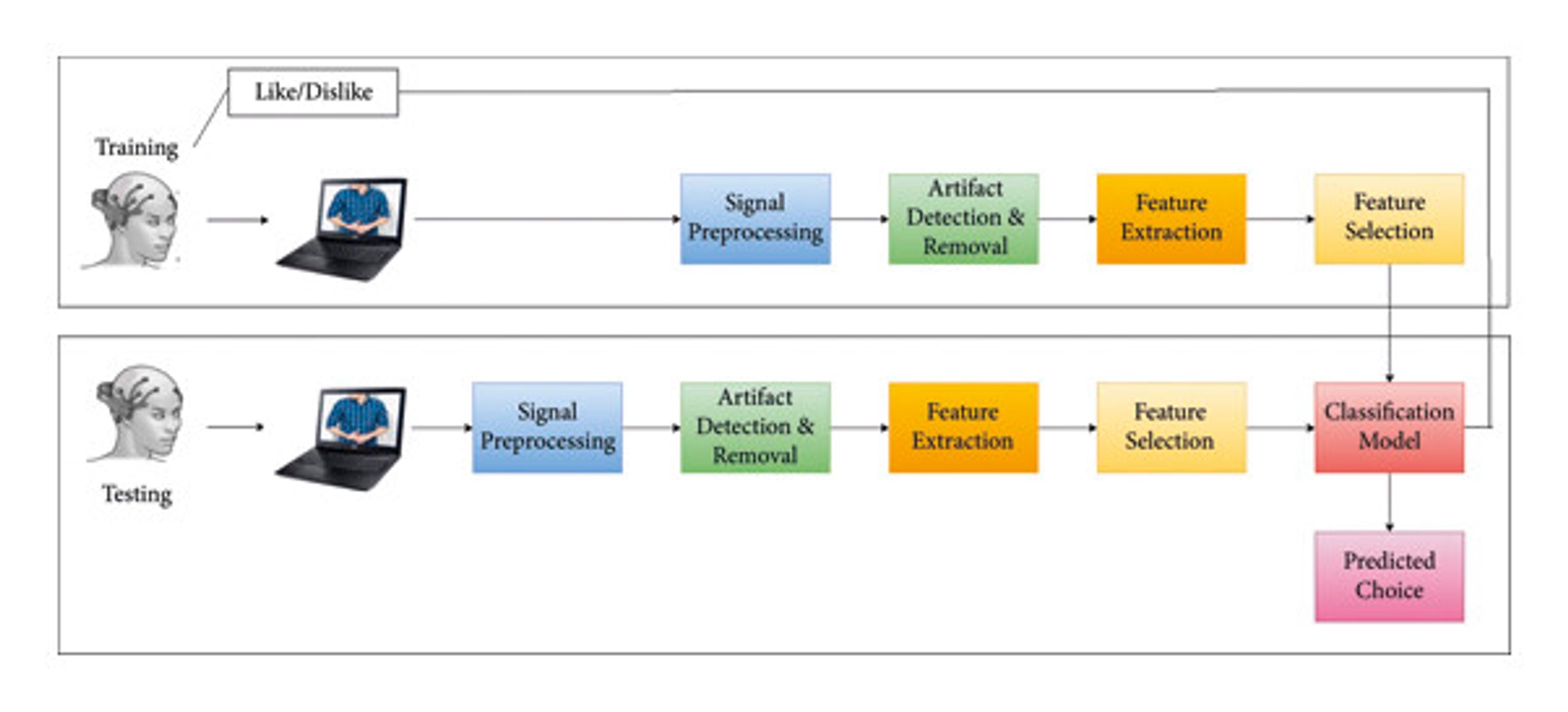

EEG-Based Preference Classification for Neuromarketing Application
Injamamul Haque Sourov, Faiyaz Alvi Ahmed, Md Tawhid Islam Opu, Aunnoy K Mutasim, M Raihanul Bashar, Rayhan Sardar Tipu, Md Ashraful Amin, and Md Kafiul Islam
Computational Intelligence and Neuroscience, Mar 2023

Neuromarketing is a modern marketing research technique that analyzes consumer behavior using neuroscientific approaches. In this work, we created, processed, and studied an EEG database of consumers’ responses to image advertisements, with the goal of building predictive models that classify consumers’ preferences from their EEG data. We performed several types of analysis using three classifier algorithms: SVM, KNN, and NN pattern recognition. The highest accuracy (75.7%) and sensitivity (95.8%) were obtained with the KNN classifier on data from female subjects. In addition, the frontal region electrodes yielded the best selective channel performance. Based on these results, we consider the KNN classifier the best choice for preference classification problems. The newly created dataset and the results derived from it will help research communities conduct further studies in neuromarketing.

@article{sourov2023eeg,
  title = {EEG-Based Preference Classification for Neuromarketing Application},
  author = {Sourov, Injamamul Haque and Ahmed, Faiyaz Alvi and Opu, Md Tawhid Islam and Mutasim, Aunnoy K and Bashar, M Raihanul and Tipu, Rayhan Sardar and Amin, Md Ashraful and Islam, Md Kafiul},
  journal = {Computational Intelligence and Neuroscience},
  volume = {2023},
  number = {1},
  pages = {4994751},
  year = {2023},
  doi = {10.1155/2023/4994751},
  bibtex_show = {true},
  publisher = {Wiley Online Library},
  dimensions = {true},
  copyright = {All rights reserved},
  langid = {english},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598100/neuro_bplgre.jpg},
}

2020


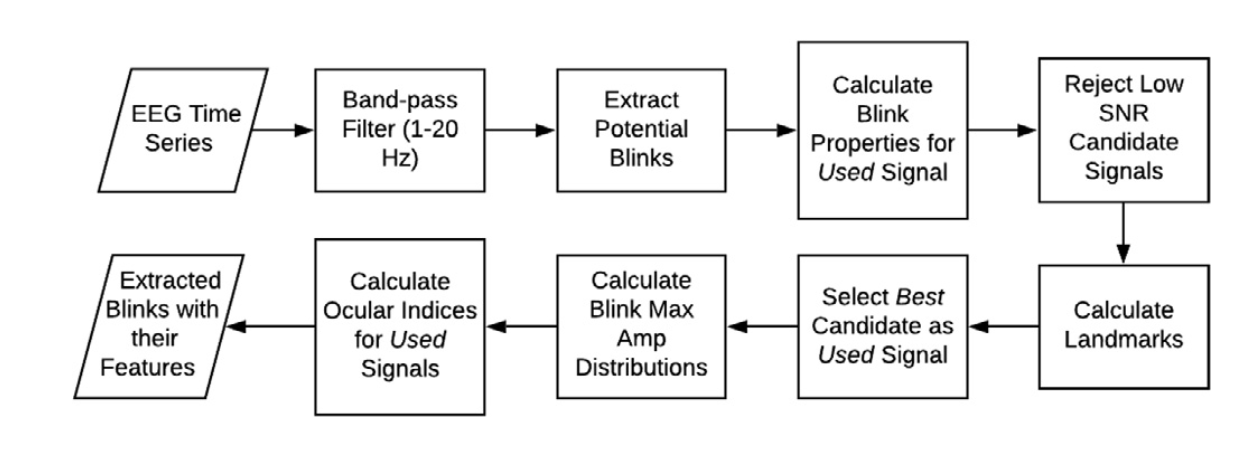

Use of spontaneous blinking for application in human authentication
Amir Jalilifard, Dehua Chen, Aunnoy K Mutasim, M Raihanul Bashar, Rayhan Sardar Tipu, Ahsan-Ul Kabir Shawon, Nazmus Sakib, M Ashraful Amin, and Md Kafiul Islam
Engineering Science and Technology, an International Journal, Mar 2020

Electrooculogram (EOG) signals from natural blinking contaminate electroencephalogram (EEG) recordings and are often removed to improve EEG signal quality. This paper discusses the possibility of using involuntary blinking signals alone for human authentication. We recorded EEG data from 46 subjects while they looked at a sequence of different pictures; during the experiment, subjects were not given any blinking task. After separating the blink EOG signals from the EEG, we extracted 25 features and preprocessed the data to handle corrupt or missing values. Spontaneous and voluntary blinks differ in their kinematic characteristics, and the control setups of previous studies may have turned spontaneous blinks into voluntary ones. We therefore carried out a series of statistical analyses to examine how the multivariate probability distribution of our data differs from that of previous studies. The statistical tests indicate that the features of both voluntary and involuntary blinks are very likely generated by Gaussian probability density functions; however, unlike voluntary blinks, spontaneous blinks are not well discriminated by a Gaussian model. None of the several models we tested could classify the data using information from a single spontaneous blink alone. We therefore examined whether a Gated Recurrent Unit (GRU) could learn the patterns of a series of blinks. Our results show that individuals can be distinguished with up to 98.7% accuracy using only a reasonably short sequence of involuntary blinking signals.

@article{jalilifard2020use,
  title = {Use of spontaneous blinking for application in human authentication},
  author = {Jalilifard, Amir and Chen, Dehua and Mutasim, Aunnoy K and Bashar, M Raihanul and Tipu, Rayhan Sardar and Shawon, Ahsan-Ul Kabir and Sakib, Nazmus and Amin, M Ashraful and Islam, Md Kafiul},
  journal = {Engineering Science and Technology, an International Journal},
  volume = {23},
  number = {4},
  pages = {903--910},
  year = {2020},
  doi = {10.1016/j.jestch.2020.05.007},
  bibtex_show = {true},
  publisher = {Elsevier},
  copyright = {All rights reserved},
  dimensions = {true},
  langid = {english},
  keywords = {User authentication, Eye blinking, Biometric, Electro-encephalogram, Electro-oculogram, Recurrent Neural Network, RNN, EEG, GRU, EOG},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598100/eyeblink_gf65nz.png},
}

2018


Effect of Artefact Removal Techniques on EEG Signals for Video Category Classification
Aunnoy K Mutasim, M Raihanul Bashar, Rayhan Sardar Tipu, Md Kafiul Islam, and M Ashraful Amin
In 2018 24th International Conference on Pattern Recognition (ICPR), Mar 2018

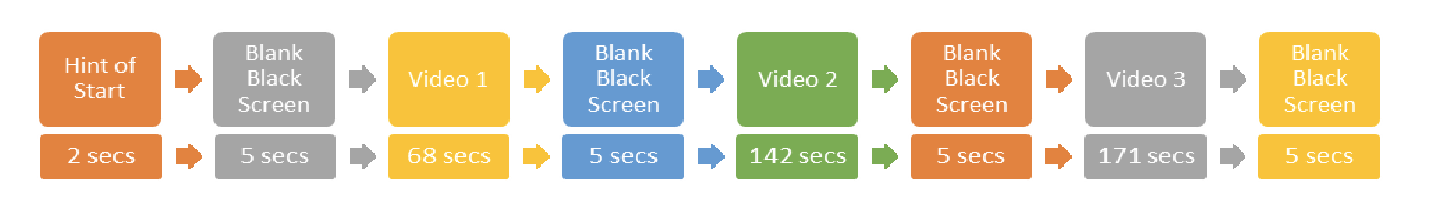

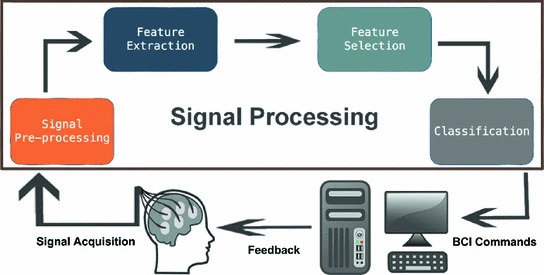

Pre-processing, feature extraction, feature selection, and classification are the four submodules of the signal processing module of a typical BCI system. Pattern recognition is mainly involved in this signal processing module. In this paper, we experimented with different state-of-the-art algorithms for each of these submodules on two separate datasets that we acquired from 38 college-aged young adults using the Emotiv EPOC and the Muse headband. We used two artefact removal techniques: a Stationary Wavelet Transform (SWT)-based denoising technique and an extended SWT technique (SWTSD). We found that SWTSD improves average classification accuracy by up to 7.2% and performs better than SWT. However, this does not imply that SWTSD will outperform SWT in other BCI paradigms or other EEG-based applications. In our study, the highest average accuracies achieved with the Muse headband and Emotiv EPOC data were 77.7% and 66.7%, respectively. From our results, we conclude that the performance of a BCI system depends on several factors, including artefact removal techniques, filters, feature extraction and selection algorithms, and classifiers, and that the appropriate choice and use of such methods can have a significant positive impact on the end results.

@inproceedings{mutasim2018effect,
  title = {Effect of Artefact Removal Techniques on EEG Signals for Video Category Classification},
  author = {Mutasim, Aunnoy K and Bashar, M Raihanul and Tipu, Rayhan Sardar and Islam, Md Kafiul and Amin, M Ashraful},
  booktitle = {2018 24th International Conference on Pattern Recognition (ICPR)},
  pages = {3513--3518},
  year = {2018},
  doi = {10.1109/ICPR.2018.8545416},
  bibtex_show = {true},
  organization = {IEEE},
  copyright = {All rights reserved},
  dimensions = {true},
  langid = {english},
  keywords = {Brain-Computer Interface, BCI, EEG, Video Category Classification, VCC, SVM, SWT, SWTSD},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598100/emg_g3o68r.png},
}

Computational intelligence for pattern recognition in EEG signals
Aunnoy K Mutasim, Rayhan Sardar Tipu, M Raihanul Bashar, Md Kafiul Islam, and M Ashraful Amin
In Computational Intelligence for Pattern Recognition, Springer, Mar 2018

Electroencephalography (EEG) captures brain signals from the scalp. If these signals are properly analyzed and their patterns recognized, EEG has high potential for applications in medicine, psychology, rehabilitation, and many other areas. However, EEG signals are inherently noise-prone, and most of the time humans cannot see patterns in the raw signals. Applying appropriate computational intelligence is therefore a must to make sense of raw EEG signals. Moreover, if the signals are collected with a consumer-grade wireless EEG acquisition device, interference is even harder to avoid, and it becomes impossible to see any pattern without the proper use of computational intelligence. The objective of EEG-based Brain-Computer Interface (BCI) systems is to extract specific signatures of brain activity and translate them into command signals that control external devices, or to understand how the brain responds to stimuli. A typical BCI system includes a signal processing module that can be further broken down into four submodules: pre-processing, feature extraction, feature selection, and classification. Computational intelligence is key to identifying and extracting features, as well as to classifying signals or discovering their discriminating characteristics. In this chapter, we present an overview of how computational intelligence is used to discover patterns in brain signals.
From our research, we conclude that computational intelligence is required at every step of an EEG-based BCI system, because EEG signals are the outcome of a highly complex, non-linear, non-stationary stochastic biological process and contain a wide variety of noise from both internal and external sources. This spans removing noise (using advanced signal processing techniques such as SWTSD, ICA, and EMD in addition to traditional filtering, by identifying and exploiting the characteristics and patterns of different artifacts and noise), feature extraction and selection (using unsupervised learning such as PCA and SVD), and classification (using supervised classifiers such as SVM, probabilistic classifiers such as NB, neural networks such as RBF networks, MLP, and DBN, or k-NN). Using appropriate computational intelligence significantly improves the end results.

@incollection{mutasim2018computational,
  title = {Computational intelligence for pattern recognition in {EEG} signals},
  author = {Mutasim, Aunnoy K and Tipu, Rayhan Sardar and Bashar, M Raihanul and Islam, Md Kafiul and Amin, M Ashraful},
  booktitle = {Computational Intelligence for Pattern Recognition},
  pages = {291--320},
  year = {2018},
  doi = {10.1007/978-3-319-89629-8_11},
  publisher = {Springer},
  bibtex_show = {true},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598098/book_eg5jeq.jpg},
  copyright = {All rights reserved},
  dimensions = {true},
  langid = {english},
  keywords = {Computational intelligence, Pattern recognition, EEG, BCI, SWT, SWTSD, PCA, LDA, SVD, Neural networks, Deep belief network, CNN, ERP, FFT, NB, Motor imagery, SVM, VCC},
}

2017


2D surface mapping for mine detection using wireless network
M Raihanul Bashar, Abul Al Arabi, Rayhan Sardar Tipu, M Tanvir Alam Sifat, M Zobair Ibn Alam, and M Ashraful Amin
In 2017 2nd International Conference on Control and Robotics Engineering (ICCRE), Mar 2017

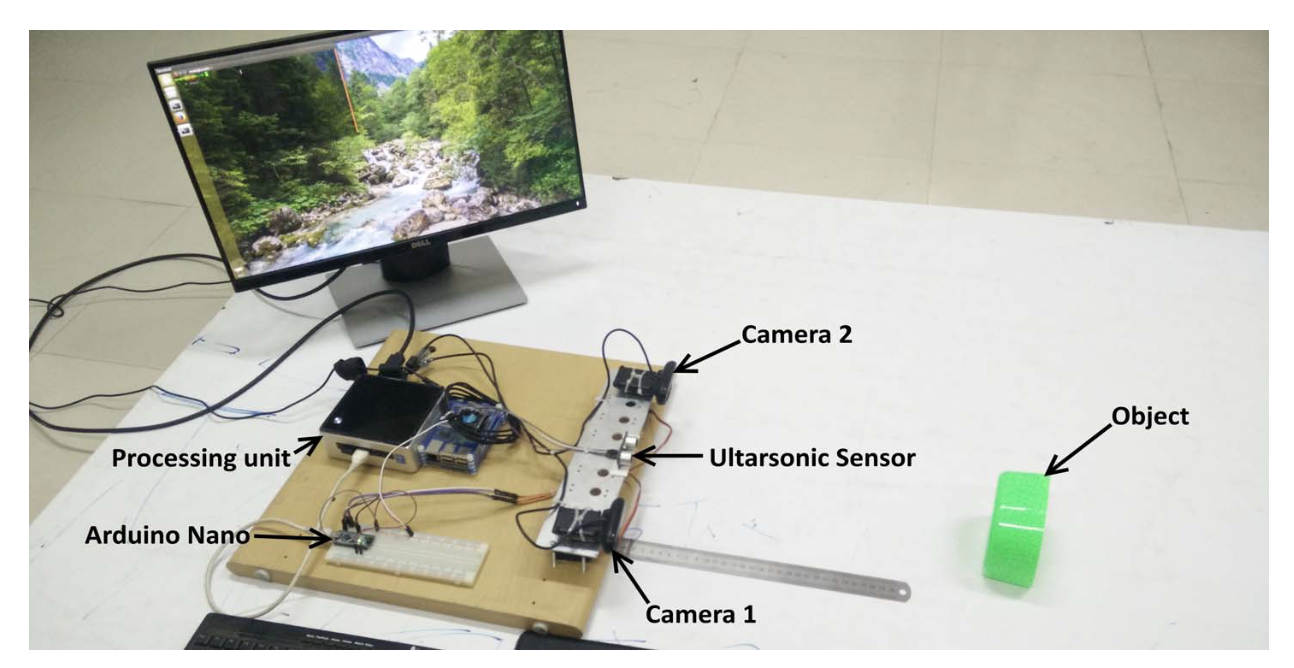

There are currently approximately 500 million live, buried mines in about 70 countries, which threaten lives and cause economic problems. This is a matter of deep concern because mines are difficult to detect when their exact positions are unknown. Although the governments of affected countries take this matter seriously, progress has been limited because they still rely on manual methods that are decades old. Given the wide variety of mines and the tremendous diversity of terrain, relying on those manual methods could take hundreds of years. The purpose of this paper is to design a prototype mapping robot system (MinerBot), built from a microcontroller and sensors, that can map any surface with depth values in real time to support mine detection. MinerBot can move in any direction and collects data with an ultrasonic sensor connected to the microcontroller; the data are then passed to a mapping function in MATLAB via socket communication. MinerBot is autonomous, efficient, and cheap.

@inproceedings{bashar20172d,
  title = {2D surface mapping for mine detection using wireless network},
  author = {Bashar, M Raihanul and Al Arabi, Abul and Tipu, Rayhan Sardar and Sifat, M Tanvir Alam and Alam, M Zobair Ibn and Amin, M Ashraful},
  booktitle = {2017 2nd International Conference on Control and Robotics Engineering (ICCRE)},
  pages = {180--183},
  year = {2017},
  doi = {10.1109/ICCRE.2017.7935066},
  bibtex_show = {true},
  organization = {IEEE},
  copyright = {All rights reserved},
  dimensions = {true},
  langid = {english},
  keywords = {Microcontroller based Robot, Multi-sensor devices, Metal Detection Sensor, MATLAB, Surface Mapping, 2D mapping, Mine detection},
  preview = {https://res.cloudinary.com/dqkxtivbq/image/upload/v1751598102/stereo_foixy9.png},
}

Effect of EMG artifacts on video category classification from EEG
Mohammad Raihanul Bashar, Rayhan Sardar Tipu, Aunnoy K Mutasim, and M Ashraful Amin
In 2017 6th International Conference on Informatics, Electronics and Vision & 2017 7th International Symposium in Computational Medical and Health Technology (ICIEV-ISCMHT), Mar 2017

EEG (electroencephalography) signals are highly susceptible to noise, and contamination by artifacts such as EOG (electrooculography) and EMG (electromyography) is inevitable. In this paper, we present our findings on how EMG artifacts in EEG signals affect video categorization. The experiments suggest that, for video category classification using EEG, signals containing EMG artifacts have greater discriminating capacity.

@inproceedings{bashar2017effect,
  title = {Effect of EMG artifacts on video category classification from EEG},
  author = {Bashar, Mohammad Raihanul and Tipu, Rayhan Sardar and Mutasim, Aunnoy K and Amin, M Ashraful},
  booktitle = {2017 6th International Conference on Informatics, Electronics and Vision \& 2017 7th International Symposium in Computational Medical and Health Technology (ICIEV-ISCMHT)},
  pages = {1--4},
  year = {2017},
  doi = {10.1109/ICIEV.2017.8338603},
  bibtex_show = {true},
  organization = {IEEE},
  copyright = {All rights reserved},
  dimensions = {true},
  langid = {english},
  keywords = {EEG, EMG, BCI, HCI, Multimedia Classification},
}

Implementation of Low Cost Stereo Humanoid Adaptive Vision for 3D Positioning and Distance Measurement for Robotics Application with Self-Calibration
Abul Al Arabi, Rayhan Sardar Tipu, Mohammad Raihanul Bashar, Binoy Barman, Shama Ali Monicay, and Md Ashraful Amin
In 2017 Asia Modelling Symposium (AMS), Mar 2017

Robots are getting smarter every day as computer vision systems are built into them. Any robot now needs a natural, or preferably humanoid, vision system to interact with real-life events. From this perspective, we propose an efficient method to determine the absolute viewpoint of any desired image location. We used a self-calibration system and a humanoid vision mechanism with stereo cameras to find the region of convergence on an object; from this, a mathematical model measures the object's distance. By comparing the positions of different objects, it is also possible to determine their relative distances. Our system shows that a vision mechanism modeled on real human eye tracking can provide a realistic view of the image for 3D point positioning.
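
The abstract does not give the mathematical model. One standard way to recover distance from a verged (converging) stereo pair is triangulation from the cameras' inward rotation angles. With baseline B and angles θL and θR measured from straight ahead, the fixated point lies at depth Z = B / (tan θL + tan θR). This minimal sketch assumes that geometry. It is not necessarily the authors' model, and the function name is illustrative.

```python
import math


def distance_from_vergence(baseline, left_angle, right_angle):
    """Depth of the fixated point seen by two inward-rotated (verging) cameras.

    Cameras sit at x = -baseline/2 and x = +baseline/2, facing +z at angle 0.
    Angles are in radians, measured from straight ahead, positive when the
    camera is rotated inward (toward the other camera).
    """
    # tan(left) = (x + B/2) / Z and tan(right) = (B/2 - x) / Z, so the sum is B / Z
    denom = math.tan(left_angle) + math.tan(right_angle)
    if denom <= 0:
        raise ValueError("rays do not converge in front of the cameras")
    return baseline / denom


# Illustrative: cameras 6 cm apart fixating a point 0.5 m straight ahead
angle = math.atan(0.03 / 0.5)
print(distance_from_vergence(0.06, angle, angle))  # approximately 0.5
```

Comparing the depths computed for two fixated objects then gives their relative distance, as the abstract describes.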

@inproceedings{al2017implementation,
  title = {Implementation of Low Cost Stereo Humanoid Adaptive Vision for 3D Positioning and Distance Measurement for Robotics Application with Self-Calibration},
  author = {Al Arabi, Abul and Tipu, Rayhan Sardar and Bashar, Mohammad Raihanul and Barman, Binoy and Monicay, Shama Ali and Amin, Md Ashraful},
  booktitle = {2017 Asia Modelling Symposium (AMS)},
  pages = {83--88},
  year = {2017},
  doi = {10.1109/AMS.2017.21},
  bibtex_show = {true},
  organization = {IEEE},
  copyright = {All rights reserved},
  dimensions = {true},
  langid = {english},
  keywords = {Stereo Vision, Movable multi-cameras, distance measurement, robotic vision, image processing, humanoid vision, self calibration},
}